By Mark Pattison | Catholic News Service

In Washington, one doesn’t need to go bird-watching with binoculars to spot a rare creature. Instead, all one needs to do is look at the hawks and doves in the Capitol.

Inside, there is an increasingly rare breed: a bill with bipartisan support.

The Kids Online Safety Act will please some in the Senate, where it was introduced in mid-February, because it’s meant to protect kids. Others will be happy because it takes on some of the nation’s biggest tech Goliaths for one of the industry’s Goliath-sized flaws: heedlessness to the point of unwillingness to keep children from being commodified as just another audience.

The measure was introduced jointly by Sens. Richard Blumenthal, D-Conn., and Marsha Blackburn, R-Tenn., after months of congressional hearings into tech and social media firms’ practices relating to minors, as well as media investigations into the harms suffered by young people who spend so much of their time on social media.

The Kids Online Safety Act will augment the Children’s Online Privacy Protection Act, which is now nearly 25 years old and observed largely in the breach by tech companies that had not even been founded when the law was passed.

COPPA protects children under age 13; the Kids Online Safety Act defines "minor" as anyone age 16 or younger.

Any “commercial software application or electronic service that connects to the internet and that is used, or is reasonably likely to be used, by a minor” is covered by the bill.

“Platforms have a duty to prevent and mitigate” harms to minors, says a summary of the bill, including “promotion of self-harm, suicide, eating disorders, substance abuse, sexual exploitation and unlawful products for minors,” gambling and alcohol among them.

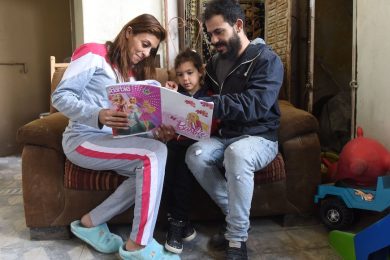

The coronavirus pandemic exposed many weaknesses and inequities in American society. The "homework gap" suffered by children without internet access was one key issue, but it was far from the only one. Kids who did have internet access and spent more time on social media faced troubles of their own. Online bullying, peer pressure and FOMO (fear of missing out) have been exploited over the past two years in ways never imagined before.

The bill requires tech firms to install safeguards to protect minors, along with parental controls that can, among other things, track the time kids spend on platforms, limit online purchases and address addictive usage.

Under the bill, platforms would have to provide a dedicated reporting channel so that minors or their parents can report harms, and platforms would have to respond to those reports in a timely manner.

One section of the Kids Online Safety Act focuses on disclosure.

“Prior to registration or use by a minor, the platform shall provide clear, accessible and easy-to-understand notice of the policies, practices and safeguards available for minors and parents,” the 30-page bill’s summary says.

If a platform uses algorithms, it must provide information about how a minor's personal data is used and offer options to modify it. If it shows ads, the platform "shall provide clear, accessible and easy-to-understand labels for such advertisements, and information about how personal data is used in targeted ads," the summary says. "Shall" is a good word to keep platform owners from weaseling out of any obligations.

The platform also will have to offer “clear and comprehensive information about the policies, practices, and safeguards available for minors and parents.”

The onus won't fall entirely on platforms, though. The bill requires the National Institute of Standards and Technology to conduct a study evaluating the most technologically feasible options for developing systems to verify age at the device or operating system level.

Further, it requires the Federal Trade Commission to establish guidelines for platforms seeking to conduct market- and product-focused research on minors. Moreover, the FTC and state attorneys general will have enforcement power.

COPPA has come under criticism for giving the FTC enforcement powers rather than granting regulatory authority to another agency such as the Federal Communications Commission.

In an online world that changes so much so quickly, the horse could be far from the open barn door by the time the FTC gets around to enforcement. But with state attorneys general also able to act, whether in a tech company's home state or in the state where the harm allegedly occurred, the threat of enforcement could keep some abuses from being committed in the first place.

Mark Pattison is media editor for Catholic News Service.